What do PostgreSQL 9.2, Oracle 12c and MySQL 5.7.8 have in common? They all integrated JSON as a possible data type. Pretty cool, huh?! Actually, I think using JSON in a relational database is one of the worst ideas you can have. Let me explain why.

In this post, I focus on PostgreSQL, as I’m more familiar with it but my arguments also apply for other relational database systems. Before I really get into it, let’s have a look at some basic JSON-related features offered by PostgreSQL.

JSON and PostgreSQL

In this post, I consider a table used to store information about persons. It’s composed of a couple of fields:

CREATE TABLE person ( id serial primary key, firstname text, lastname text, infos json );

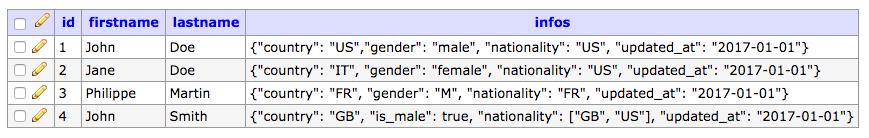

This schema allows the insertion of all sorts of valid JSON data in column infos. So in our case, we could end up with the following rows:

Now, with appropriate functions, you can query this table. For instance, you can retrieve all the persons with their country:

SELECT id, firstname, lastname, infos->>'country' FROM person;

So far so good. Now let’s try to retrieve all the persons with the US nationality:

SELECT id, firstname, lastname FROM person WHERE (infos->'nationality')::jsonb ? 'US';

It gets a bit annoying, as the nationality attribute is sometimes a string, and sometimes an array of strings. But it’s still manageable. We can also retrieve all the men from the table. The query would look something like this:

SELECT id, firstname, lastname FROM person WHERE infos->>'gender' = 'male' OR infos->>'gender' = 'M' OR (infos->>'is_male')::boolean;

Now it’s a mess! And this is because the gender is stored in various forms so you had to look at the data to write the query. This is very unusual, as in a pure relational database you only have to look at the schema. Besides, we were kinda lucky here, as no rows have both gender and is_male attributes with inconsistent values.

At this point, you might be thinking: “Oh but we should be more careful and always consider gender to store the gender (with for instance 0 for males and 1 for females), and always use an array to store nationalities (even if most of the time, there’s only one value)”. This remark makes no sense to me. If you want to put restrictions on JSON values, then consider a proper set of columns, just the way you’d do if JSON was not allowed. In the next section, I try to give stronger arguments to make my point…

So why not using JSON fields?

Actually, the real question should be “Why using JSON?” but some of you might be happy enough with the answer “Because I can, and because it’s handy”. On this point, I agree with this article which states: “Just because you can store JSON, it doesn’t follow you should”.

SQL queries should be – as any other piece of code – readable and maintainable. When an SQL query uses a JSON field, it becomes very tricky to make sure the query is correct, as you can’t rely on meta-data: a key might not exist and data types may vary from a row to another. Even if you make sure your query is correct when you first write it, there is no guarantee that it will still work when new data will be added. For instance, let’s consider the following query:

SELECT id, firstname, lastname, (infos->>'updated_at')::date FROM person;

As expected, the query returns the following data:

Now let’s insert a new row:

INSERT INTO person (firstname, lastname, infos) VALUES (

'Jean-Pierre',

'Jackson',

'{"country": "FR", "gender": "M", "nationality": "FR",

"updated_at": "2017-15-02"}'

);

This insertion works just fine. But can you guess what is going to happen if we execute the previous SELECT query? Well… you’ll get this error:

Error in query: ERROR: date/time field value out of range: "2017-15-02" HINT: Perhaps you need a different "datestyle" setting.

This error is due to the date format: PostgreSQL expect a string following the YYYY-MM-DD format. The format of the attribute updated_at is different: YYYY-DD-MM (which is perfectly fine in JSON). The problem only appeared after the last insertion, as the other rows were all updated on the 1st of January 2017 (in this specific case, YYYY-MM-DD is the same as YYYY-DD-MM). So unless you could check the JSON data before it’s inserted, you cannot guarantee that queries will remain correct in time. You can only agree with me on this one: this is freaking crazy in a relational DB context! This problem would never appear if the attribute updated_at was stored in a Date field.

From a design point of view, using JSON in a relational database is not good either, as it makes the database denormalized. For those who don’t remember, normalisation was introduced by Edgar Codd in the early 70’s. There are several forms of normalisation: first normal form (1NF), 2NF, …, 6NF, BCNF, etc. In practice, the 3NF is considered satisfactory. When a JSON field is present in a relation, the 1NF is not even satisfied, as values are not atomic with respect to the DBMS.

Some people seem to think that using JSON is more prone to evolution, as attributes can be added or removed in the JSON fields without having to change the DB schema. From my point of view, this is a sign of poor design. Thinking JSON will ease the evolution of your database in the future is delusional; it will actually spoil it from day 1.

Finally, some might argue it’s convenient. But in this case, why don’t we just push the concept further and have only 2 fields per relation: an ID (to play the role of primary key) and a JSON field that contains the rest of the data? And even further and have only one JSON field? If this idea makes any sense in your context, then give up relational databases and move on to NoSQL databases. You’re more likely to take the best of these concepts with MongoDB, Cassandra, CouchBase, etc.

Conclusion

In case you didn’t got it by now: I strongly discourage you from adding JSON fields in a relational database. The only situation in which you should consider it is when you don’t have to query the JSON data with SQL (which is a pity). The JSON type will guarantee that the data inserted is correct (from a syntactic point of view) but you should not aim for more. Finally, it’s worth noting that the arguments I present in this post are applicable to other types: XML fields, Array fields (to some extend), etc. So be careful when you use fancy types, and make sure you use them for the right reason.